Revolutionary Biometric Security: 5 Crucial Advantages And Disadvantages

Related Articles: Revolutionary Biometric Security: 5 Crucial Advantages and Disadvantages

- Revolutionary 5 Breakthroughs In Renewable Energy

- Revolutionary 5 Breakthroughs: Personalized Medicine’s Tech-Driven Ascent

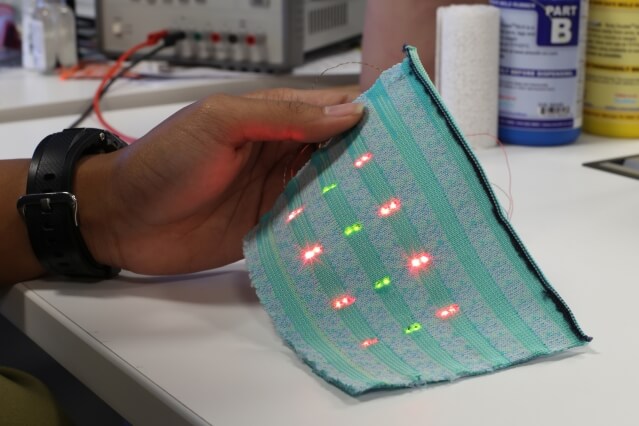

- Revolutionary Smart Fabrics: 5 Key Innovations Transforming Textiles

- Revolutionary Breakthrough: 5 Key Advances In Brain-Computer Interfaces

- Revolutionary Biohacking: 5 Powerful Ways Tech Is Transforming Biology

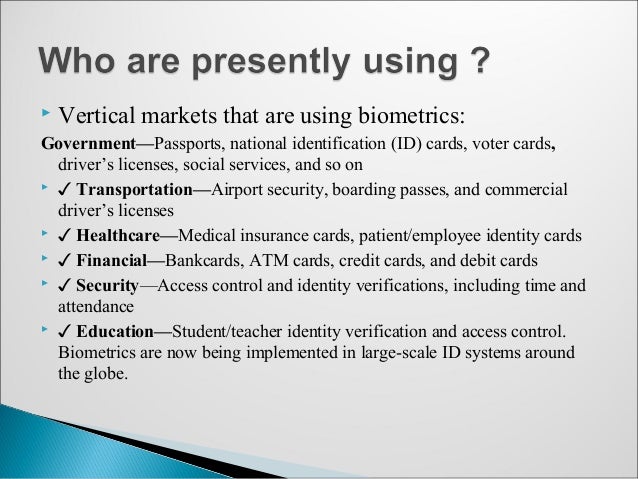

Biometric security, the use of unique biological characteristics for authentication, is rapidly transforming how we secure our digital and physical worlds. From fingerprint scanners on smartphones to iris recognition systems at airports, this technology promises a future where passwords are relics of the past. However, alongside its undeniable potential, biometric security presents significant challenges and risks that demand careful consideration. This article delves into five key advantages and five crucial disadvantages of biometric security, providing a balanced perspective on this powerful and evolving technology.

Five Powerful Advantages of Biometric Security:

- Enhanced Security and Reduced Fraud: Perhaps the most compelling advantage of biometric security is its resistance to common attacks on credentials. Unlike passwords, which can be stolen, guessed, or shared, biometric traits are tied to an individual, which makes it considerably harder for unauthorized people to gain access to systems or sensitive information. A fingerprint, for instance, cannot be guessed, lent to a colleague, or typed into a phishing page the way a password can. This helps reduce fraud, particularly in financial transactions and identity theft, and blunts attacks that target password databases or rely on phishing. Stronger authentication can also lower the cost of managing compromised accounts and recovering from data breaches, since organizations may spend less on password resets and fraud investigations over time. It can also foster greater trust among users, encouraging adoption of online services and transactions.

- Improved User Experience and Convenience: Biometric authentication is more streamlined than traditional methods. Scanning a fingerprint or presenting an iris is quicker and more intuitive than remembering and typing a complex password. This convenience matters most where speed and ease of access are paramount, such as unlocking smartphones, logging into secure networks, or authorizing payments. It also removes much of the burden of password management: users have fewer passwords to remember, fewer forgotten passwords to reset, and fewer lockouts to resolve. The result is less frustration, higher productivity, and greater user satisfaction.

- Stronger Identity Verification: Biometric authentication provides a higher level of confidence that a person is who they claim to be. Usernames and passwords prove only that someone knows a secret, which leaves them open to impersonation and identity theft. A biometric trait is bound to the person presenting it, which reduces the risk of fraudulent access. This is particularly important in high-security applications such as access control for sensitive facilities, border control, and law enforcement investigations, where more precise identification helps keep unauthorized people away from critical systems and resources.

- Increased Efficiency and Productivity: The speed and ease of biometric authentication improve efficiency across many applications. In workplaces, biometric access control can reduce the time spent on security checks, letting employees focus on their tasks. In healthcare settings, it can streamline patient identification and access to medical records, improving the efficiency of care delivery. Removing password-related delays and automating authentication saves time, which translates into lower costs and better operational effectiveness.

-

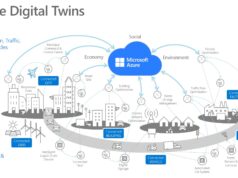

Scalability and Integration: Biometric security systems are highly scalable and can be easily integrated into existing IT infrastructure. This allows organizations to seamlessly incorporate biometric authentication into their security protocols without significant disruption to their operations. The modular nature of biometric systems allows for customization and adaptation to diverse security needs, making them suitable for a wide range of applications and environments. This scalability and integration capability ensure that biometric security solutions can effectively address the security needs of organizations of all sizes, from small businesses to large multinational corporations. The seamless integration with existing systems reduces the complexity and costs associated with implementing new security measures.

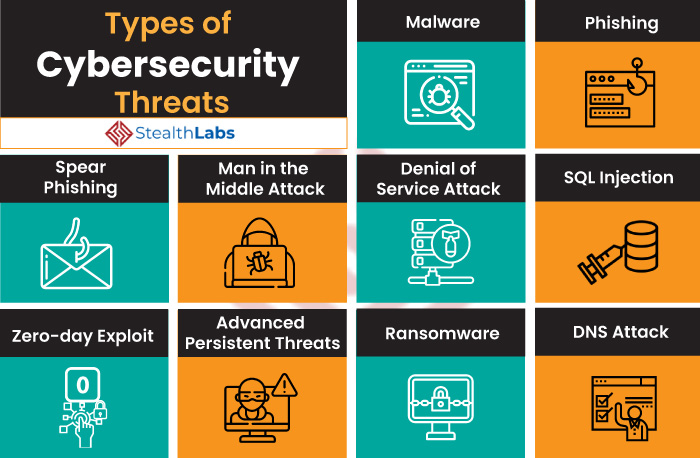

Five Critical Disadvantages of Biometric Security:

-

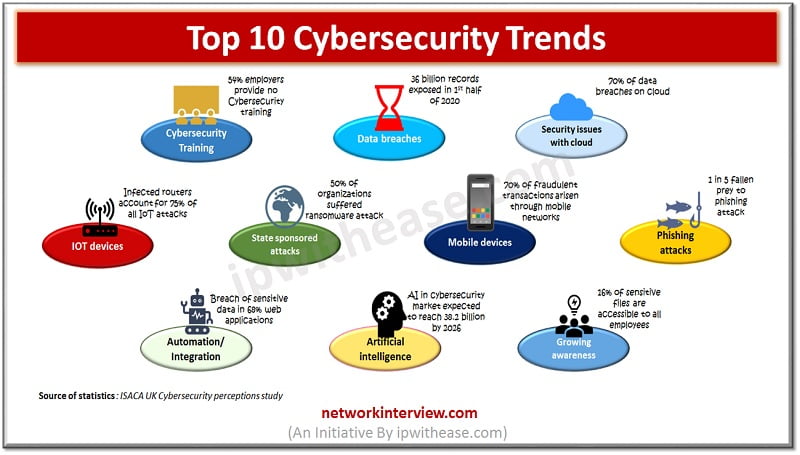

Privacy Concerns and Data Breaches: One of the most significant concerns surrounding biometric security is the potential for privacy violations. Biometric data is highly sensitive, and its unauthorized access or misuse can have severe consequences. Data breaches involving biometric information can lead to identity theft, fraud, and other serious crimes. The irreversible nature of biometric data further exacerbates the risk, as compromised data cannot be simply changed or reset like passwords. The potential for government surveillance and misuse of biometric data is another significant concern, raising ethical and legal questions about data collection, storage, and usage.

- Accuracy and Reliability Issues: Biometric technologies have advanced significantly, but they are not perfectly accurate. Environmental conditions, user behavior, and sensor quality all affect the authentication process. False positives (incorrectly accepting an unauthorized user, also called false acceptances) create security vulnerabilities, while false negatives (incorrectly rejecting an authorized user, also called false rejections) cause user frustration. In high-security applications, even a small error rate can have serious consequences. The need for regular calibration and maintenance also adds to operational complexity and cost.

- Cost of Implementation and Maintenance: Implementing and maintaining biometric security systems can be expensive. The initial investment in hardware, software, and infrastructure can be substantial, particularly for large-scale deployments. Ongoing maintenance, including software updates, sensor calibration, and system upgrades, adds to the total, as does the need for specialized personnel to manage the systems. These costs can be a barrier to adoption, particularly for smaller organizations with limited budgets.

- Vulnerability to Spoofing and Attacks: Although biometric traits are hard to replicate, biometric systems are not immune to sophisticated attacks. Techniques such as fingerprint spoofing, iris spoofing, and deepfakes can be used to circumvent biometric checks, and these techniques keep improving. Countering new attacks requires continuous innovation and adaptation, which adds to the ongoing cost and complexity of managing biometric security.

- Ethical and Legal Considerations: The use of biometric technology raises several ethical and legal concerns, including who owns biometric data, how it is secured, and how it might be misused. Legal frameworks governing the collection, storage, and use of biometric data vary between jurisdictions and are often unclear or incomplete, which poses a significant challenge. Issues of consent, data privacy, and potential discrimination based on biometric characteristics require careful consideration and robust legal safeguards. The lack of standardization and interoperability between different biometric systems also complicates the management of biometric data across applications and organizations.
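
The trade-off between false positives and false negatives noted under Accuracy and Reliability Issues can be made concrete with a small Python sketch. It assumes the system produces a similarity score for each comparison and accepts the user when that score meets a threshold. The function name `error_rates` and both score lists are hypothetical, chosen for illustration rather than taken from any real biometric product.

```python
from typing import Sequence, Tuple


def error_rates(
    genuine_scores: Sequence[float],
    impostor_scores: Sequence[float],
    threshold: float,
) -> Tuple[float, float]:
    """Return (false_accept_rate, false_reject_rate) at a given threshold.

    Assumption: a comparison is accepted when its similarity score
    is greater than or equal to the threshold.
    """
    if not genuine_scores or not impostor_scores:
        raise ValueError("both score lists must be non-empty")
    # Impostors whose score clears the threshold are false positives.
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    # Genuine users whose score falls short are false negatives.
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    return (
        false_accepts / len(impostor_scores),
        false_rejects / len(genuine_scores),
    )


# Hypothetical similarity scores in [0, 1]; not measurements from a real system.
GENUINE = [0.91, 0.84, 0.77, 0.95, 0.62, 0.88, 0.79, 0.93]
IMPOSTOR = [0.12, 0.35, 0.48, 0.66, 0.21, 0.05, 0.71, 0.30]

if __name__ == "__main__":
    for threshold in (0.5, 0.7, 0.9):
        far, frr = error_rates(GENUINE, IMPOSTOR, threshold)
        print(f"threshold={threshold:.1f}  FAR={far:.3f}  FRR={frr:.3f}")
```

With these made-up scores, raising the threshold from 0.5 to 0.9 lowers the false accept rate from 0.250 to 0.000 while the false reject rate climbs from 0.000 to 0.625. A stricter threshold buys security at the price of more rejected legitimate users, which is the tension between vulnerability and user frustration described above.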

In conclusion, biometric security offers clear advantages in security, user experience, and efficiency. It also presents serious challenges related to privacy, accuracy, cost, vulnerability to attack, and ethics. Responsible and effective deployment requires weighing these benefits and risks carefully. The future of biometric security lies in more accurate, reliable, and secure technologies, coupled with robust legal and ethical frameworks that protect individual privacy and rights.
