Revolutionary Tech: 5 Crucial Ways Technology Transforms Disaster Management

Introduction

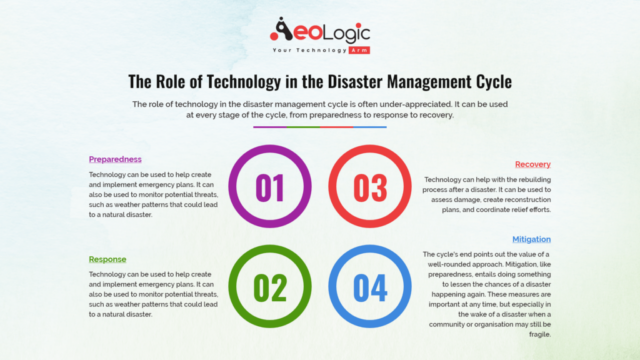

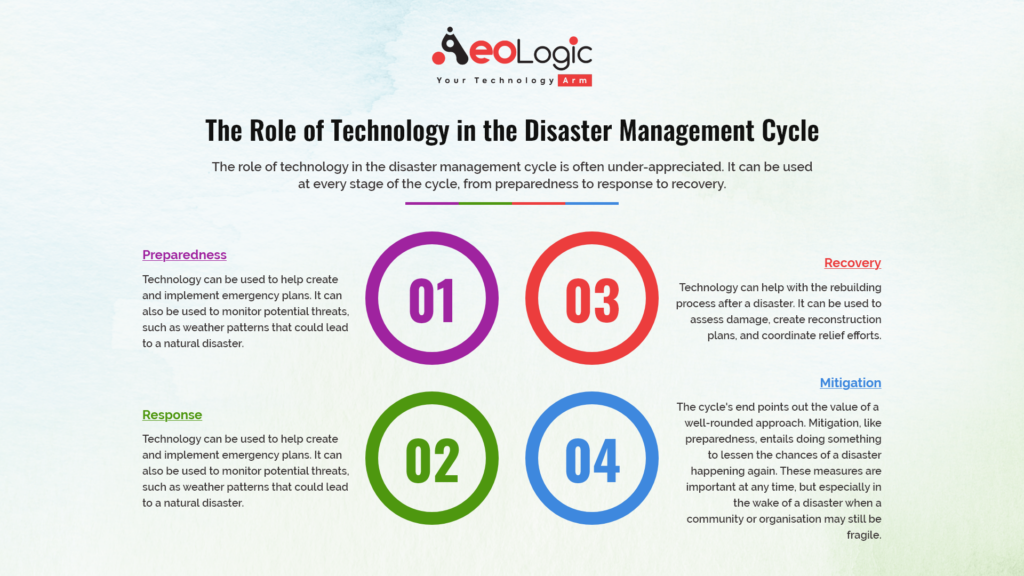

Disasters, whether natural or human-made, strike with devastating force, leaving communities reeling and infrastructure crippled. The scale and complexity of these events demand rapid, efficient, and coordinated responses. For decades, disaster management relied heavily on traditional methods, often proving inadequate in the face of overwhelming challenges. However, the integration of technology has fundamentally reshaped the landscape of disaster response, offering unprecedented capabilities to predict, prepare for, mitigate, respond to, and recover from these catastrophic events. This article will explore five crucial ways technology is revolutionizing disaster management, highlighting its transformative potential and underscoring the need for continued innovation.

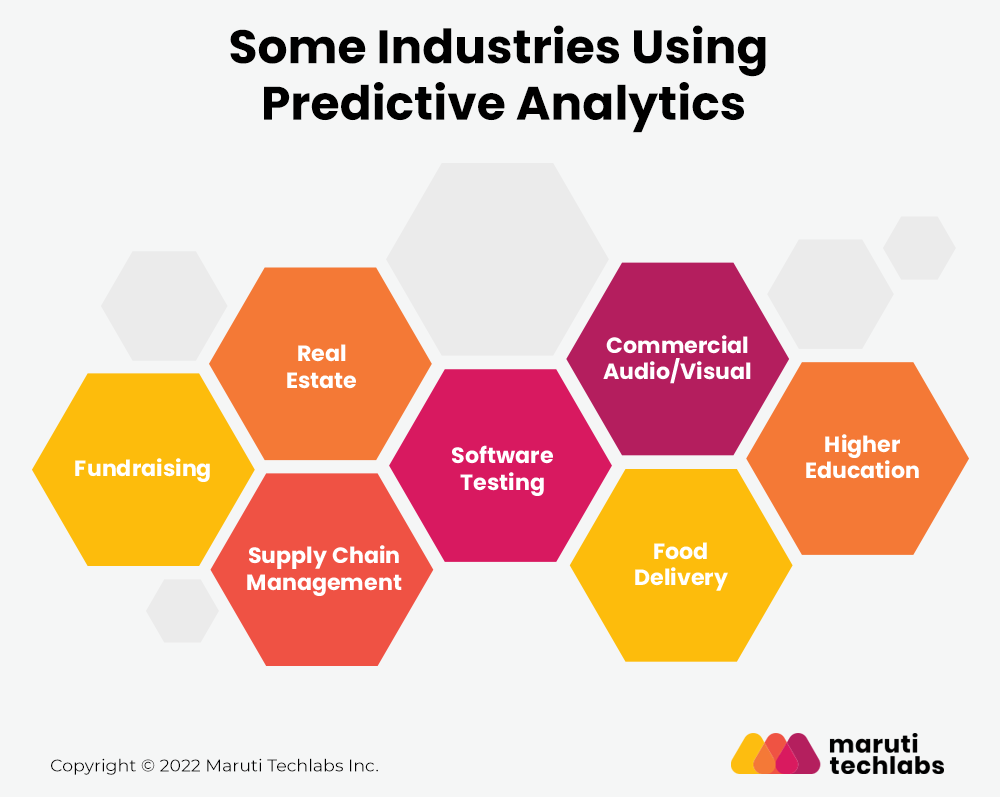

1. Predictive Analytics and Early Warning Systems: Forewarned is Forearmed

One of the most significant advancements in disaster management is the development of sophisticated predictive analytics and early warning systems. These systems draw on large volumes of data from diverse sources, including meteorological satellites, seismic sensors, hydrological monitoring networks, and social media feeds. Analysts process these data streams with statistical models and, increasingly, with machine learning and other artificial intelligence (AI) techniques. The results let forecasters anticipate hazards such as hurricanes, floods, and wildfires, and let seismic networks detect an earthquake within seconds of its onset. Earthquakes themselves still cannot be reliably predicted in advance.

For instance, modern weather models forecast hurricane tracks, and increasingly their intensity, far more accurately than they did a few decades ago. This gives authorities more lead time for evacuation planning and resource allocation.

Earthquake early warning works differently. Once a quake begins, nearby sensors pick up the fast but weaker primary (P) waves. Automated systems, some now aided by AI, then issue alerts before the slower, more damaging shaking reaches more distant areas. This can give people anywhere from a few seconds to, at greater distances, tens of seconds to take protective action.

These systems are not only about predicting or detecting the event; they must also deliver warnings effectively to the people at risk. That means using multiple channels, such as mobile phone alerts, public address systems, and social media platforms, so the message is both widely received and understood.
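The warning window for an earthquake is short because of simple arithmetic. An alert can go out only after a station detects the fast P wave, while damaging S-wave shaking travels more slowly. The minimal sketch below makes that concrete. It assumes typical crustal wave speeds of about 6.0 km/s (P) and 3.5 km/s (S), a straight-line path from the epicentre, and a hypothetical 3-second processing and broadcast delay. None of these figures come from the article.

```python
# Minimal sketch: estimated earthquake early-warning lead time at a site.
# Assumed, typical crustal wave speeds (not from the article).
P_WAVE_KM_S = 6.0
S_WAVE_KM_S = 3.5


def warning_lead_time(site_distance_km: float,
                      station_distance_km: float,
                      processing_s: float = 3.0) -> float:
    """Seconds between the alert being issued and S-wave shaking reaching a site.

    site_distance_km: distance from the epicentre to the site being warned.
    station_distance_km: distance from the epicentre to the nearest seismic station.
    processing_s: hypothetical time to detect, characterize, and broadcast the event.
    A negative result means the site is in the "blind zone" and gets no warning.
    """
    alert_time = station_distance_km / P_WAVE_KM_S + processing_s
    s_arrival = site_distance_km / S_WAVE_KM_S
    return s_arrival - alert_time


for d in (10, 50, 100, 200):
    print(f"{d:>4} km: {warning_lead_time(d, station_distance_km=10):6.1f} s")
```

Under these assumptions, a site 10 km from the epicentre gets no warning at all. Sites 100 to 200 km away get roughly 24 to 52 seconds. This is why warnings are measured in seconds and why people closest to the rupture benefit least.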

The accuracy and timeliness of these predictions are crucial. False alarms can lead to complacency and a diminished response to genuine threats, while delayed warnings can have catastrophic consequences. Therefore, continuous improvement and validation of these predictive models are essential to ensure their effectiveness and build public trust. Furthermore, the accessibility of these systems, particularly in vulnerable and underserved communities, is a key aspect of maximizing their impact.
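Validating a warning system usually starts by comparing issued warnings with what actually happened. The sketch below uses three standard forecast-verification scores: probability of detection (POD), false alarm ratio (FAR), and critical success index (CSI). The article does not name specific metrics, and the season counts are hypothetical, for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Contingency:
    """Counts from comparing issued warnings against observed events."""
    hits: int          # warned, and the event occurred
    misses: int        # event occurred with no warning
    false_alarms: int  # warned, but no event occurred

    def pod(self) -> float:
        """Probability of detection: share of real events that were warned."""
        total = self.hits + self.misses
        return self.hits / total if total else float("nan")

    def far(self) -> float:
        """False alarm ratio: share of warnings not followed by an event."""
        total = self.hits + self.false_alarms
        return self.false_alarms / total if total else float("nan")

    def csi(self) -> float:
        """Critical success index: hits over all warned-or-observed cases."""
        total = self.hits + self.misses + self.false_alarms
        return self.hits / total if total else float("nan")


# Hypothetical season of flood warnings, for illustration only.
season = Contingency(hits=18, misses=2, false_alarms=12)
print(f"POD={season.pod():.2f}  FAR={season.far():.2f}  CSI={season.csi():.2f}")
```

A high POD paired with a high FAR describes exactly the trade-off discussed above. Few real events are missed, but many warnings turn out to be false, which is what erodes public trust over time.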

2. Geographic Information Systems (GIS) and Mapping: A Clear Picture of the Crisis

Geographic Information Systems (GIS) have become indispensable tools in disaster management, providing a comprehensive visual representation of the affected area. GIS integrates various data layers, including topography, infrastructure, population density, and damage assessments, into interactive maps. This allows responders to quickly understand the scope of the disaster, identify areas of greatest need, and optimize resource allocation.
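One common way GIS combines data layers is a raster weighted overlay. Each layer is a grid over the same area, and cells are scored by a weighted sum of the layer values. The minimal sketch below assumes two hypothetical, pre-normalized (0 to 1) layers and equal weights. Real GIS packages provide this operation with far more control over projections and resampling.

```python
# Minimal raster weighted-overlay sketch. Layers are aligned grids with values
# already normalized to 0..1. Layer names, values, and weights are hypothetical.
Grid = list[list[float]]


def weighted_overlay(layers: dict[str, Grid], weights: dict[str, float]) -> Grid:
    """Combine aligned grids cell by cell as a weighted sum."""
    names = list(layers)
    rows, cols = len(layers[names[0]]), len(layers[names[0]][0])
    for name in names:
        if len(layers[name]) != rows or any(len(r) != cols for r in layers[name]):
            raise ValueError(f"layer {name!r} is not aligned with the others")
    return [[sum(weights[n] * layers[n][r][c] for n in names) for c in range(cols)]
            for r in range(rows)]


def top_cells(grid: Grid, k: int) -> list[tuple[int, int]]:
    """Return the (row, col) indices of the k highest-scoring cells."""
    cells = [(grid[r][c], (r, c)) for r in range(len(grid)) for c in range(len(grid[0]))]
    return [rc for _, rc in sorted(cells, reverse=True)[:k]]


population = [[0.9, 0.2], [0.4, 0.1]]  # normalized population density
damage = [[0.3, 0.8], [0.9, 0.0]]      # normalized damage assessment
priority = weighted_overlay({"population": population, "damage": damage},
                            {"population": 0.5, "damage": 0.5})
print(top_cells(priority, k=2))
```

Changing the weights changes which areas rise to the top. In practice, choosing weights is a policy decision as much as a technical one.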

During a disaster, GIS maps can show the reported locations of trapped individuals, damaged infrastructure, and critical resources such as hospitals and shelters. This near-real-time situational awareness helps first responders navigate, prioritize rescue efforts, and coordinate relief operations. After the disaster, GIS plays a central role in damage assessment, enabling authorities to quantify the extent of destruction, prioritize recovery work, and guide the allocation of aid.
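A basic GIS query behind "which shelter is closest?" is a distance calculation between coordinates. The sketch below uses the haversine great-circle formula with a mean Earth radius of 6371 km. The facility names and coordinates are hypothetical. It measures straight-line distance, not travel distance: a real deployment would route over a road network that accounts for blocked or damaged roads.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_facility(point: tuple[float, float],
                     facilities: dict[str, tuple[float, float]]) -> tuple[str, float]:
    """Return (name, distance_km) of the facility closest to point=(lat, lon)."""
    name, (lat, lon) = min(facilities.items(),
                           key=lambda item: haversine_km(*point, *item[1]))
    return name, haversine_km(*point, lat, lon)


# Hypothetical shelter coordinates, for illustration only.
shelters = {"school_gym": (34.05, -118.25), "community_centre": (34.10, -118.30)}
print(nearest_facility((34.06, -118.26), shelters))
```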

The use of drones and aerial imagery enhances the capabilities of GIS. Drones equipped with high-resolution cameras can capture detailed images of affected areas, providing valuable information that can be integrated into GIS maps. This is particularly useful in areas that are difficult to access by ground vehicles. The integration of satellite imagery also provides a broader perspective, enabling the monitoring of large-scale events and the assessment of damage across vast regions.
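How much detail drone imagery adds to a GIS map depends largely on its ground sampling distance (GSD), the ground width represented by one image pixel. The sketch below applies the standard photogrammetry formula, which assumes a camera pointing straight down over flat ground. The camera values (13.2 mm sensor width, 8.8 mm focal length, 5472-pixel image width) are illustrative assumptions, not figures from the article.

```python
def ground_sampling_distance_m(sensor_width_mm: float, focal_length_mm: float,
                               image_width_px: int, altitude_m: float) -> float:
    """Ground distance covered by one pixel, in metres (nadir view, flat ground)."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def footprint_width_m(sensor_width_mm: float, focal_length_mm: float,
                      altitude_m: float) -> float:
    """Width of ground captured in one image across the sensor's width, in metres."""
    return sensor_width_mm * altitude_m / focal_length_mm


# Illustrative camera values (assumed, not from the article).
for alt in (50, 100, 120):
    gsd = ground_sampling_distance_m(13.2, 8.8, 5472, alt)
    print(f"{alt} m altitude: {gsd * 100:.1f} cm/pixel, "
          f"{footprint_width_m(13.2, 8.8, alt):.0f} m wide footprint")
```

The trade-off is linear. Flying higher covers more ground per image but makes each pixel proportionally coarser, which matters when crews are trying to distinguish structural damage from debris.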

3. Communication and Coordination: Breaking Down Silos

Effective communication and coordination among various stakeholders are vital for a successful disaster response. Technology plays a crucial role in breaking down communication silos and facilitating seamless collaboration between different agencies, organizations, and individuals.

Mobile communication technologies, such as satellite phones and two-way radios, help maintain connectivity even where cellular and landline infrastructure has been damaged. Social media platforms can be used to disseminate information to the public, solicit help from volunteers, and coordinate relief efforts. Dedicated emergency management platforms enable secure and efficient information sharing among responders.

These technologies are not only important for disseminating information but also for collecting it. Citizen reporting through social media and mobile applications can provide valuable real-time information about the situation on the ground, allowing responders to adapt their strategies accordingly. This participatory approach enhances the responsiveness and effectiveness of disaster management.
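Turning scattered citizen reports into something responders can act on often starts with spatial aggregation: counting reports per small area to reveal hotspots. The minimal sketch below snaps coordinates to a square latitude/longitude grid. The report format (dicts with "lat" and "lon" keys), the 0.01-degree cell size, and the sample coordinates are all hypothetical. Degree-based cells are not equal-area, and real systems would also need deduplication and verification of reports.

```python
import math
from collections import Counter


def grid_cell(lat: float, lon: float, cell_deg: float = 0.01) -> tuple[int, int]:
    """Snap a coordinate to the integer index of a square lat/lon grid cell."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def hotspots(reports: list[dict], cell_deg: float = 0.01,
             min_reports: int = 2) -> list[tuple[tuple[int, int], int]]:
    """Count reports per cell and keep cells with at least min_reports, busiest first."""
    counts = Counter(grid_cell(r["lat"], r["lon"], cell_deg) for r in reports)
    return [(cell, n) for cell, n in counts.most_common() if n >= min_reports]


# Hypothetical reports, for illustration only.
reports = [
    {"lat": 34.051, "lon": -118.243, "text": "Street flooded"},
    {"lat": 34.052, "lon": -118.244, "text": "Car stuck in water"},
    {"lat": 34.058, "lon": -118.241, "text": "Basement flooding"},
    {"lat": 34.091, "lon": -118.301, "text": "Power line down"},
]
print(hotspots(reports))
```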

However, challenges remain in ensuring interoperability between different communication systems. A lack of standardization can hinder the seamless exchange of information, leading to delays and inefficiencies. Therefore, the development of common standards and protocols is essential to enhance the effectiveness of communication and coordination during disasters.

4. Robotics and Automation: Reaching the Unreachable

In the aftermath of a disaster, accessing affected areas can be extremely hazardous and challenging. Robotics and automation technologies are increasingly being used to overcome these challenges, providing capabilities that extend human reach and reduce risk.

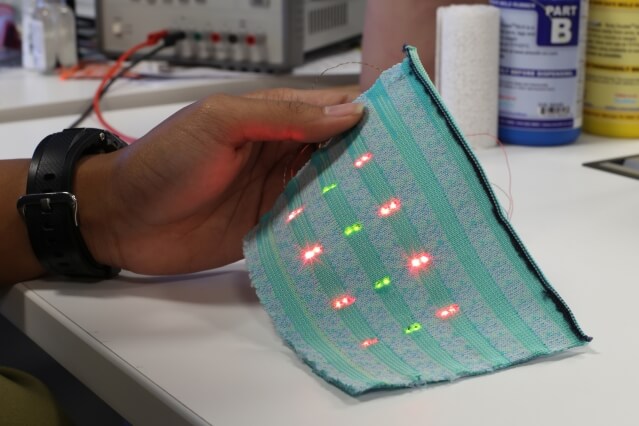

Unmanned aerial vehicles (UAVs, or drones) can be deployed to survey damaged areas, assess the extent of destruction, and locate survivors. Robots can be used to navigate dangerous environments, such as collapsed buildings or contaminated areas, performing tasks such as search and rescue, debris removal, and infrastructure inspection. Automated systems can also be used to manage logistics, such as distributing supplies and coordinating transportation.
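When a drone surveys a damaged area, its flight plan is often a back-and-forth "lawnmower" (boustrophedon) coverage pattern. Adjacent passes overlap so that no ground is missed. The minimal sketch below generates such waypoints for a flat rectangular area in local metres. The area size, the 150 m swath, and the 20% overlap are hypothetical. Real flight-planning tools also handle irregular boundaries, terrain, wind, and battery limits.

```python
import math


def lawnmower_waypoints(width_m: float, height_m: float, swath_m: float,
                        overlap: float = 0.2) -> list[tuple[float, float]]:
    """Back-and-forth survey waypoints over a width x height rectangle.

    Coordinates are (x, y) in metres from the rectangle's lower-left corner.
    swath_m: ground width covered by one pass; overlap: fraction shared by adjacent passes.
    """
    if width_m <= 0 or height_m <= 0 or swath_m <= 0:
        raise ValueError("dimensions must be positive")
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    spacing = swath_m * (1 - overlap)
    if width_m <= swath_m:
        xs = [width_m / 2]  # a single pass down the middle covers the full width
    else:
        # Enough passes for the last swath to reach the far edge; clamp the final line.
        n = math.ceil((width_m - swath_m) / spacing) + 1
        xs = [min(swath_m / 2 + i * spacing, width_m - swath_m / 2) for i in range(n)]
    waypoints = []
    for i, x in enumerate(xs):
        # Alternate direction on each pass so the drone never flies back empty.
        start, end = (0.0, height_m) if i % 2 == 0 else (height_m, 0.0)
        waypoints += [(x, start), (x, end)]
    return waypoints


route = lawnmower_waypoints(300, 200, swath_m=150, overlap=0.2)
print(route)
```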

The use of robotics and automation is not only enhancing the efficiency and safety of disaster response, but it is also improving the speed and effectiveness of recovery efforts. By automating repetitive and dangerous tasks, responders can focus their efforts on more critical activities, such as providing medical assistance and supporting survivors.

5. Big Data Analytics and Post-Disaster Recovery: Learning from the Past

Big data analytics play a vital role in analyzing the vast amounts of data generated during and after a disaster. This data includes information from various sources, such as sensor networks, social media, and government databases. By analyzing this data, it’s possible to identify patterns, trends, and insights that can improve future disaster preparedness and response.

For example, analyzing social media data can help identify areas where needs are most urgent and where resources should be prioritized. Analyzing sensor data can help understand the impact of a disaster on infrastructure and the environment. Post-disaster, big data analytics can be used to assess the effectiveness of response efforts and identify areas for improvement.
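To show the idea of mining posts for urgent needs, the sketch below tags each post with need categories by simple keyword matching, then tallies the needs per area. The keyword lists, the (area, text) post format, and the sample posts are hypothetical. Production systems typically use trained text classifiers and must cope with misspellings, multiple languages, and misinformation, none of which this sketch handles.

```python
from collections import Counter, defaultdict

# Hypothetical keyword lists, for illustration only.
NEED_KEYWORDS = {
    "water": {"water", "thirsty", "dehydrated"},
    "medical": {"injured", "medicine", "doctor", "ambulance"},
    "shelter": {"homeless", "shelter", "roof"},
}


def tag_needs(text: str) -> set[str]:
    """Return the need categories whose keywords appear in the text."""
    words = {w.strip(".,!?").lower() for w in text.split()}
    return {need for need, keywords in NEED_KEYWORDS.items() if words & keywords}


def needs_by_area(posts: list[tuple[str, str]]) -> dict[str, Counter]:
    """Count tagged needs per area; each post is an (area, text) pair."""
    table: dict[str, Counter] = defaultdict(Counter)
    for area, text in posts:
        table[area].update(tag_needs(text))
    return table


posts = [
    ("north", "Family injured, need a doctor!"),
    ("north", "No water since yesterday"),
    ("north", "Ambulance needed on Main St"),
    ("south", "Our roof collapsed, need shelter"),
]
for area, counts in needs_by_area(posts).items():
    print(area, counts.most_common())
```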

This data-driven approach to disaster management allows for continuous learning and improvement. By analyzing past events, it’s possible to develop more effective strategies for predicting, preparing for, responding to, and recovering from future disasters. This iterative process of learning and improvement is essential for enhancing resilience and minimizing the impact of future disasters.

Conclusion

Technology has become an indispensable tool in disaster management, transforming the way we predict, prepare for, respond to, and recover from these catastrophic events. From predictive analytics and early warning systems to robotics and big data analytics, technology offers a powerful arsenal of tools to enhance resilience and minimize the impact of disasters. However, the effective utilization of technology requires careful planning, coordination, and investment. This includes developing robust infrastructure, establishing interoperability between different systems, and ensuring equitable access to technology for all communities, particularly those most vulnerable to the impacts of disasters. The future of disaster management lies in harnessing the full potential of technology to build more resilient and safer communities. The ongoing development and refinement of these technologies, coupled with effective strategies for their deployment, will be crucial in mitigating the devastating consequences of future disasters.
