Brilliant Revolution: 5 Ways the Internet of Things Is Transforming Our World

The Internet of Things (IoT) is no longer a futuristic fantasy; it is a rapidly unfolding reality that is reshaping our lives. Billions of devices, from smartwatches and refrigerators to industrial sensors and self-driving cars, are now connected and exchanging data, forming a vast network with the potential to transform industries, improve efficiency, and enhance daily life. However, this powerful technology also presents significant challenges that must be addressed to ensure it is deployed responsibly and beneficially. This article explores five key areas where the IoT is making a tangible difference, highlighting both its transformative potential and the critical considerations for its future development.

1. Smart Homes and Enhanced Living:

The most visible impact of the IoT is in the smart home. Imagine a home that anticipates your needs before you articulate them: this is the promise of the connected home, where devices interact to optimize comfort, security, and energy efficiency. Smart thermostats learn your preferences and adjust temperatures accordingly, reducing energy waste. Smart lighting systems switch and dim lights based on occupancy and available daylight, saving energy and improving ambiance. Security systems combine cameras, sensors, and smart locks to provide comprehensive protection, alerting you to potential threats in real time. Smart appliances, from refrigerators that track inventory to washing machines that optimize their cycles, streamline household chores and improve resource management. Integrated into a cohesive ecosystem, these individual advances add up to a markedly better living experience: more convenience, improved safety, and a smaller environmental footprint.

However, connecting all these devices requires robust cybersecurity to prevent unauthorized access and data breaches, a critical condition for widespread adoption. The risk of data privacy violations and the ethics of constantly monitored homes also remain important subjects of ongoing discussion.
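To make the occupancy-and-daylight idea concrete, here is a minimal Python sketch of the kind of rule a smart lighting controller might apply. The function name `lighting_level`, the 300-lux target, and the linear dimming rule are illustrative assumptions, not any vendor's algorithm; real products typically add schedules, hysteresis, and learned preferences.

```python
def lighting_level(occupied: bool, ambient_lux: float, target_lux: float = 300.0) -> float:
    """Return a dimming level from 0.0 (off) to 1.0 (full brightness).

    Hypothetical rule: lights stay off in an empty room; otherwise they
    supply only the light that daylight fails to provide.
    """
    if not occupied:
        return 0.0  # nobody present: switch the lights off
    shortfall = max(0.0, target_lux - ambient_lux)  # light daylight does not cover
    return min(1.0, shortfall / target_lux)  # scale to a 0..1 dimming level


print(lighting_level(occupied=True, ambient_lux=150.0))  # 0.5
```

A bright room (ambient light at or above the target) yields 0.0, so the controller saves energy without the occupant doing anything.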

2. Revolutionizing Healthcare:

The IoT is transforming healthcare, creating opportunities for better patient care, more efficient operations, and new treatments. Wearable devices monitor vital signs, activity levels, and sleep patterns, providing data for personalized health management. Remote patient monitoring lets providers track patients' conditions from a distance, enabling early intervention and helping to reduce hospital readmissions. Smart insulin pumps and other connected medical devices deliver precise medication doses, improving treatment outcomes for chronic conditions. In hospitals, IoT-enabled systems optimize resource allocation, track medical equipment, and streamline workflows, improving both efficiency and patient safety. Continuous data collection also opens the door to earlier disease detection and more personalized medicine.

However, the security and privacy of sensitive patient data are paramount. Robust cybersecurity protocols and strict data governance frameworks are essential to protect patient confidentiality and prevent misuse of personal health information. Ensuring equitable access to these technologies, so that they do not widen existing disparities in care, also remains a significant challenge.
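At its simplest, remote monitoring compares each incoming reading with per-patient limits and raises an alert when a value falls outside them. The sketch below assumes a hypothetical `Reading` record and a hypothetical `check_reading` function; the limit values are placeholders for illustration, not clinical guidance, since real thresholds are set by clinicians for each patient.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One hypothetical sample from a wearable or bedside monitor."""
    heart_rate_bpm: float
    spo2_percent: float


# Illustrative (low, high) limits only; clinicians set real limits per patient.
LIMITS = {
    "heart_rate_bpm": (50.0, 120.0),
    "spo2_percent": (92.0, 100.0),
}


def check_reading(reading: Reading) -> list[str]:
    """Return a human-readable alert for every field outside its limits."""
    alerts = []
    for name, (low, high) in LIMITS.items():
        value = getattr(reading, name)
        if not low <= value <= high:
            alerts.append(f"{name}={value} outside [{low}, {high}]")
    return alerts


print(check_reading(Reading(heart_rate_bpm=72, spo2_percent=98)))  # []
print(check_reading(Reading(heart_rate_bpm=130, spo2_percent=98)))
# ['heart_rate_bpm=130 outside [50.0, 120.0]']
```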

3. Transforming Industries and Optimizing Supply Chains:

The IoT is having a profound impact on industry, offering opportunities for greater efficiency, lower costs, and higher productivity. In manufacturing, connected sensors monitor equipment performance, predict maintenance needs, and optimize production processes; this predictive maintenance reduces downtime and lowers operating costs. In logistics and supply chain management, IoT-enabled tracking devices follow goods as they move, giving real-time visibility into the supply chain. That visibility improves inventory management, optimizes delivery routes, and reduces the risk of delays or disruptions. In agriculture, smart sensors monitor soil conditions, weather, and crop health, enabling precision farming techniques that optimize resource use and improve yields. Across these sectors, the IoT can deliver substantial economic benefits, including less waste, better quality control, and higher profitability.

However, integrating IoT technologies across diverse industrial systems requires significant investment in infrastructure and expertise. The potential impact of automation on employment also needs careful consideration and proactive measures to mitigate job displacement.
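One simple way to see how sensor data feeds predictive maintenance is a rolling z-score check: flag any reading that deviates sharply from the machine's recent history. This is a basic anomaly-detection technique chosen for illustration, not a description of any particular product; the function name, window size, threshold, and sample vibration values are all assumptions.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    history = deque(maxlen=window)  # sliding window of recent readings
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical vibration readings from one motor; index 7 is a sudden spike.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 4.0, 1.0]
print(flag_anomalies(vibration))  # [7]
```

Note that the spike then enters the window and inflates the standard deviation for the next few readings; a real system might exclude flagged values from the baseline or use a more robust model.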

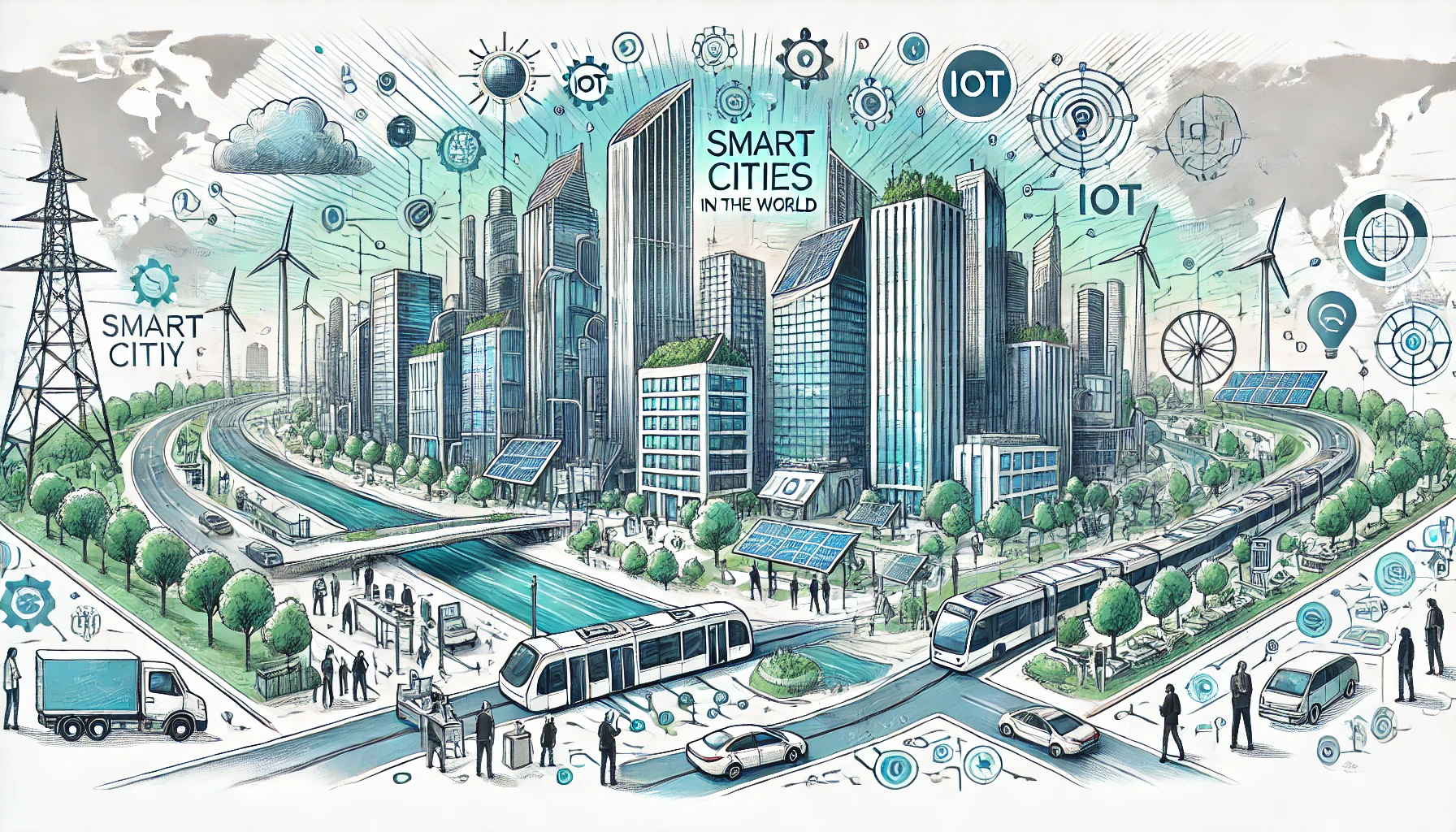

4. Smart Cities and Improved Urban Living:

The IoT plays a central role in smart cities, improving urban infrastructure and quality of life. Smart streetlights adjust brightness based on traffic and pedestrian activity, reducing energy consumption and improving safety. Smart parking systems report parking availability in real time, reducing congestion and improving traffic flow. Smart waste management systems monitor how full each bin is, so collection routes can be optimized and overflowing bins avoided. Environmental sensors track air quality, water levels, and other conditions, providing data for informed decisions and environmental protection. Together, these systems create a more efficient, sustainable, and responsive urban environment.

However, smart city initiatives require significant investment in infrastructure, data management, and cybersecurity. Privacy concerns and the need for transparent data governance are crucial considerations for developing smart cities ethically and responsibly, and ensuring that all citizens share equitably in the benefits is a critical goal.
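The waste-collection idea can be sketched in two steps: keep only bins whose fill sensors report them nearly full, then order the visits. The sketch below uses a greedy nearest-neighbor heuristic, which is simple but not guaranteed to find the shortest route; the data layout (planar coordinates plus a fill fraction), the 80% threshold, and the function name `plan_collection` are hypothetical.

```python
import math


def plan_collection(bins, depot=(0.0, 0.0), threshold=0.8):
    """bins maps bin_id -> ((x, y), fill_fraction).

    Returns the ids of bins at or above `threshold`, in the order a greedy
    nearest-neighbor route starting from `depot` would visit them.
    """
    pending = {bid: pos for bid, (pos, fill) in bins.items() if fill >= threshold}
    route, current = [], depot
    while pending:
        # Visit whichever remaining full bin is closest to the truck's position.
        nearest = min(pending, key=lambda bid: math.dist(current, pending[bid]))
        route.append(nearest)
        current = pending.pop(nearest)
    return route


bins = {
    "A": ((1.0, 0.0), 0.90),
    "B": ((5.0, 0.0), 0.95),
    "C": ((2.0, 0.0), 0.30),  # mostly empty: skipped this round
    "D": ((3.0, 0.0), 0.85),
}
print(plan_collection(bins))  # ['A', 'D', 'B']
```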

5. Enhanced Transportation and Logistics:

The IoT is transforming transportation, from individual vehicles to entire logistics networks. Connected cars provide real-time traffic information, enhance safety features, and optimize driving routes. Autonomous vehicles use sensors and data analytics to navigate roads, with the potential to improve safety, efficiency, and traffic flow. In logistics, IoT-enabled tracking devices monitor the location and condition of goods throughout transit, helping to ensure timely delivery and reducing the risk of loss or damage. Together, these technologies are improving the efficiency, safety, and sustainability of transportation.

However, the widespread adoption of autonomous vehicles raises questions about safety regulation, liability, and ethics. The cybersecurity of connected vehicles is also a critical concern, since vulnerabilities could be exploited to compromise vehicle control or steal sensitive data. These challenges must be addressed carefully to ensure that IoT-enabled transportation systems operate safely and reliably.
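Monitoring the condition of goods often means watching for temperature excursions in refrigerated shipments. The sketch below reports periods where a tracker's readings stayed outside an allowed range for several consecutive samples. The 2 to 8 °C range, the three-sample minimum, and the function name `find_excursions` are illustrative assumptions; actual limits depend on the product being shipped.

```python
def find_excursions(samples, low=2.0, high=8.0, min_consecutive=3):
    """samples: list of (timestamp_minutes, temperature_c) in time order.

    Returns (start, end) timestamps of every run of at least
    `min_consecutive` readings outside [low, high].
    """
    excursions = []
    run = []  # timestamps of the current out-of-range run
    for ts, temp in samples:
        if temp < low or temp > high:
            run.append(ts)
        else:
            if len(run) >= min_consecutive:
                excursions.append((run[0], run[-1]))
            run = []
    if len(run) >= min_consecutive:  # a run may still be open at the end
        excursions.append((run[0], run[-1]))
    return excursions


readings = [(0, 5.0), (5, 6.1), (10, 9.2), (15, 9.8), (20, 10.1),
            (25, 7.0), (30, 8.5), (35, 5.0)]
print(find_excursions(readings))  # [(10, 20)]
```

Requiring several consecutive readings filters out single noisy samples, such as the brief blip at minute 30, at the cost of reacting a little later to a genuine problem.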

Challenges and Considerations:

While the potential benefits of the IoT are immense, its widespread adoption also presents significant challenges that must be addressed. These include:

- Security: The interconnected nature of IoT devices creates a vast attack surface, making them vulnerable to cyberattacks. Robust security measures, including encryption, authentication, and access control, are essential to protect against unauthorized access and data breaches.

- Privacy: The collection and use of personal data by IoT devices raise significant privacy concerns. Clear data governance frameworks and transparent data handling practices are necessary to protect user privacy and prevent the misuse of personal information.

- Interoperability: The lack of standardization across IoT devices and platforms can hinder interoperability and limit the benefits of interconnected systems. Open standards and interoperability protocols are crucial for integrating different devices and platforms seamlessly.

- Scalability: The sheer number of IoT devices and the volume of data they generate pose significant challenges for scalability and data management. Efficient storage, processing, and analysis techniques are needed to handle the massive amounts of data produced by IoT networks.

- Ethical considerations: The use of IoT technologies raises several ethical questions, including the potential for bias in algorithms, the impact on employment, and the implications for data ownership and control. Careful consideration of these issues is necessary to ensure the responsible development and deployment of IoT technologies.
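As one concrete example of the authentication measures mentioned under Security, a device and a server that share a secret key can attach an HMAC tag to each message, letting the receiver detect tampered or forged data. This sketch uses Python's standard `hmac` and `hashlib` modules; the message format and the function names `sign_payload` and `verify_message` are assumptions. It provides integrity and authenticity only: encryption would come from transport security such as TLS, and replay protection would need something extra, such as a timestamp or counter in the payload.

```python
import hashlib
import hmac
import json


def sign_payload(payload: dict, key: bytes) -> dict:
    """Serialize a sensor payload and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True)  # stable serialization
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(message: dict, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


key = b"hypothetical-shared-device-key"
msg = sign_payload({"sensor": "t1", "temp": 21.5}, key)
print(verify_message(msg, key))  # True

tampered = dict(msg, body=msg["body"].replace("21.5", "99.0"))
print(verify_message(tampered, key))  # False
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not reveal how many characters of a forged tag were correct.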

Conclusion:

The Internet of Things is a powerful technology with the potential to transform our world in countless ways. From smart homes and healthcare to industrial automation and smart cities, the IoT is already making a tangible difference in our lives. However, realizing the full potential of the IoT requires addressing the significant challenges related to security, privacy, interoperability, scalability, and ethics. By proactively addressing these challenges and fostering collaboration among stakeholders, we can harness the transformative power of the IoT to create a more efficient, sustainable, and equitable world. The future of the IoT is bright, but its success depends on our ability to navigate the complexities and challenges that lie ahead, ensuring that this powerful technology is used responsibly and for the benefit of all.
